Pass me the yellow highlighter

I have talked before about how Blender’s Video Sequence Editor (VSE) is now pretty useful for editing videos (conference videos, for example) compared to how it used to be. As an aside, I seem to remember that audio desynchronisation was a particularly persistent problem, the workaround being, essentially, not to make videos with audio in Blender.

Having made highlight videos in the past (mostly the distant past, the heady days of the 00s), I wanted to give them another shot. Although the process is time-consuming, the results can be highly entertaining. I used to use a commercial Windows NLE (the name, depending on how you pronounce it, either rhymes with the French word for ‘light’ in British English, or rhymes with ‘veneer’ in American English), but I now prefer Linux software. That’s where I have done my work for the past several years, and ideally it should be FOSS, because I believe software freedoms are important. Noncommercial is a plus, as my budget is very limited. Blender ticks all those boxes.

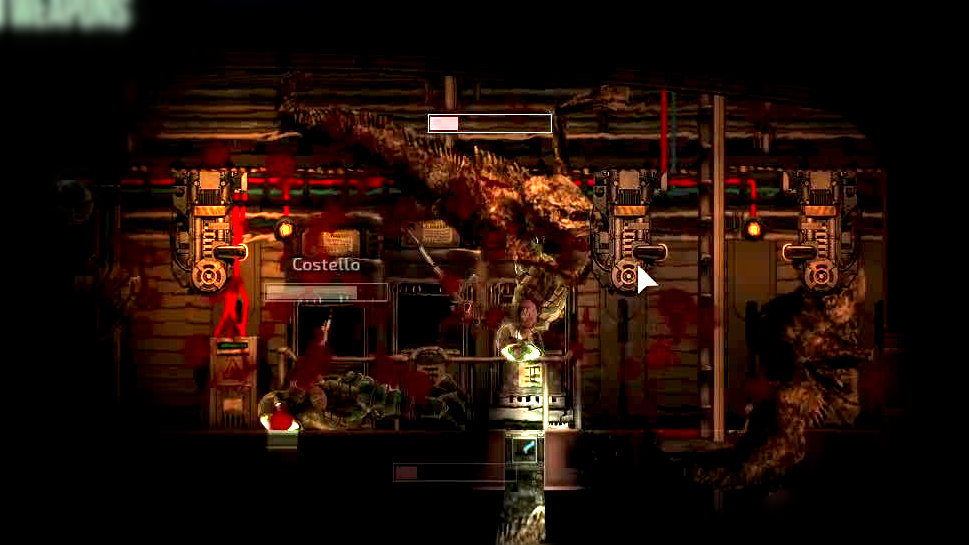

I whipped up a small highlight video demonstrating the obvious dangers of being under great water pressure in the game Barotrauma (see Steam or Wikipedia).

I quite like the “captions follow the speaker” style of moving text, as it (i) helps the viewer work out who is speaking and what is going on, and (ii) can enhance the comedy, if that’s the effect you’re going for.

The problem is that adding them is time-consuming compared with simply placing the clips one after another with a transition. The time goes mainly into two things:

- creating the text objects: transcribing the dialogue, then actually using the interface to add, format and position each one on the tracks

- moving/animating the text with the speaker (i.e. motion tracking), e.g. via keyframes

The latter is done well by some commercial software, but apparently it can be done in Blender as well! The Movie Clip Editor can be used for tracking and masking, but I am not yet familiar with it.
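Short of real tracking, the keyframe route can at least be scripted. The sketch below is manual keyframing, not motion tracking: it assumes you have read the speaker's position off the preview yourself as hypothetical `(frame, x, y)` points in full-resolution pixels (origin top-left), and it assumes a text strip's `location` uses 0 to 1 coordinates with the origin at the bottom-left of the frame, which is worth checking in your Blender version. The helper names `to_normalized` and `keyframe_path` are made up for illustration, and `sequences_all` is the older name of the strip collection (newer releases may call it `strips_all`).

```python
"""Sketch: keyframe a VSE text strip so it roughly follows a speaker.

Manual keyframing only; the (frame, x, y) points are hypothetical values
read off the preview by hand, in full-resolution pixels, origin top-left.
"""


def to_normalized(x_px, y_px, width, height):
    """Convert top-left-origin pixels to 0..1 coordinates with a
    bottom-left origin (assumed to match the text strip's `location`)."""
    if not (0 <= x_px <= width and 0 <= y_px <= height):
        raise ValueError("point lies outside the frame")
    return x_px / width, 1.0 - y_px / height


def keyframe_path(strip_name, points):
    """Insert one `location` keyframe per (frame, x, y) point (run inside Blender)."""
    import bpy  # imported here so to_normalized also works outside Blender

    scene = bpy.context.scene
    strip = scene.sequence_editor.sequences_all[strip_name]
    width = scene.render.resolution_x
    height = scene.render.resolution_y
    for frame, x_px, y_px in points:
        strip.location = to_normalized(x_px, y_px, width, height)
        strip.keyframe_insert(data_path="location", frame=frame)
```

For example, `keyframe_path("caption_000", [(1, 400, 300), (48, 900, 320)])` would, under those assumptions, slide a hypothetical strip named `caption_000` across the frame over roughly two seconds at 24 fps, with Blender interpolating between the keys.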

The first item, creating the text objects, has two aspects. For text entry, I have already experimented with using Google for voice recognition/transcription, and the results weren’t usable, although they were funny to read. But even if we have to do the transcribing ourselves, we can certainly speed things up by automating (one of my favourite words) the creation of the text objects in the VSE.

Blender is extensible via a Python API, so it should be possible (!) to write a script that takes transcribed text and creates text objects from it. I’ve done something similar before, generating text captions (as images) for Shotcut, so I already have the concept in my head; it’s mostly the output format that changes.
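As a rough idea of what such a script might look like, here is a minimal sketch. The transcript format (`start | end | text`, times in seconds) is a hypothetical one chosen for illustration, as are the strip names, the default channel 3 and the font size of 48. It is written against the `sequences` collection of Blender 2.9x to 4.x; newer releases may rename this to `strips`. The parsing half is plain Python, so it can be checked outside Blender; only `add_text_strips` needs `bpy`.

```python
"""Sketch: create VSE text strips from a plain-text transcript.

Hypothetical transcript format, one caption per line:
    start_seconds | end_seconds | caption text
Blank lines and lines starting with '#' are skipped.
"""
from dataclasses import dataclass


@dataclass
class Caption:
    start: float  # seconds from the start of the edit
    end: float    # seconds from the start of the edit
    text: str


def parse_transcript(source: str) -> list[Caption]:
    """Parse the hypothetical 'start | end | text' format into Captions."""
    captions = []
    for lineno, raw in enumerate(source.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # maxsplit=2 so any '|' inside the caption text is kept
        parts = [part.strip() for part in line.split("|", 2)]
        if len(parts) != 3:
            raise ValueError(f"line {lineno}: expected 'start | end | text'")
        start, end, text = float(parts[0]), float(parts[1]), parts[2]
        if end <= start:
            raise ValueError(f"line {lineno}: end must come after start")
        captions.append(Caption(start, end, text))
    return captions


def seconds_to_frame(seconds: float, fps: float) -> int:
    """Round a time in seconds to the nearest frame offset."""
    return round(seconds * fps)


def add_text_strips(captions: list[Caption], channel: int = 3) -> list:
    """Create one text strip per caption (must be run inside Blender)."""
    import bpy  # imported here so the parser above also works outside Blender

    scene = bpy.context.scene
    editor = scene.sequence_editor_create()  # ensures the scene has a sequence editor
    fps = scene.render.fps / scene.render.fps_base
    strips = []
    for i, cap in enumerate(captions):
        start = scene.frame_start + seconds_to_frame(cap.start, fps)
        end = scene.frame_start + seconds_to_frame(cap.end, fps)
        strip = editor.sequences.new_effect(
            name=f"caption_{i:03d}",
            type='TEXT',
            channel=channel,
            frame_start=start,
            frame_end=max(end, start + 1),  # keep very short captions at least one frame long
        )
        strip.text = cap.text
        strip.font_size = 48
        strip.use_shadow = True
        strips.append(strip)
    return strips
```

From Blender's Text Editor, something like `add_text_strips(parse_transcript(open("transcript.txt").read()))` (path hypothetical) would then lay the captions out on one channel; overlapping lines, such as two people talking at once, would probably need their own channels. Positioning and styling each strip would still be manual, but the tedious part of adding them one by one goes away.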
