Working on the backlog

Background

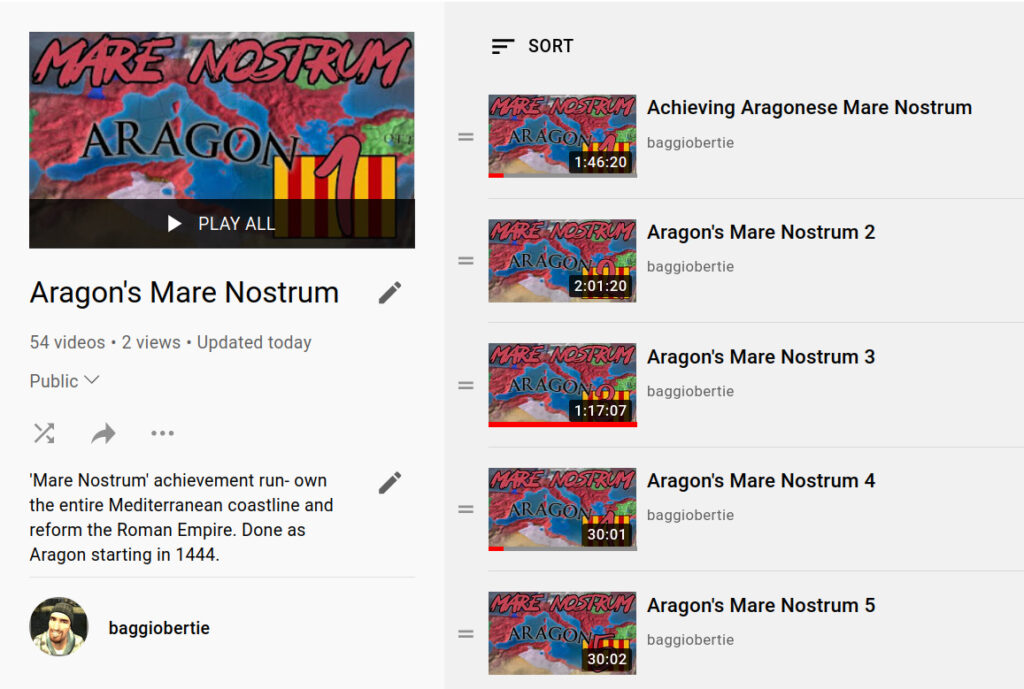

Back in the summer of 2019 I streamed a Europa Universalis IV campaign as Aragon, where I went for the Mare Nostrum achievement (“Restore the Roman Empire and own the entire Mediterranean and Black Sea coast lines.”). It was good fun! I had uploaded some videos to the playlist but didn’t finish it at the time for whatever reason.

To get the videos out the door I had to do a few things:

- split the remaining lengthy videos

- upload those splits to YouTube

- generate json for the new split videos

- generate thumbnails for the videos on YouTube

- make sure the update script works

Each of those steps had tools I have written in the past to automate them, and yet they all had some minor hurdles to overcome!

Splitting the Remaining Videos

Clearly I had planned for this, as I had a couple of bash scripts in the pending footage directory (split.sh and splitmn.sh*). Neither looked quite robust enough for me to trust!

* I’ve just realised ‘mn’ here refers to Mare Nostrum, and not m & n as metasyntactic variables…

† As an aside, the youtube-automation tools and others are undergoing a reorganisation (WIP), but that’s a separate story.

So I went back to splitfootage.py, a trusty script part of my youtube-automation set of tools that was written around the time of processing these EUIV campaigns†.

The script was in decent shape, but I changed the split length to 30 minutes, improved its output, and sorted a small cosmetic bug where the last split was given a duration despite not needing one (ffmpeg will run until the end of input if not told otherwise).

There were 51 videos after the split, which tallied up nicely with what the directory summary script told me.
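As a rough illustration of the last-split behaviour, here is a minimal sketch of how the ffmpeg arguments could be built. It is not the actual splitfootage.py: the function name, the output naming scheme and the use of stream copy are assumptions made for the example.

```python
def split_commands(source, total_seconds, split_seconds=30 * 60):
    """Build one ffmpeg argument list per split of `source`.

    Hypothetical helper: names and output pattern are illustrative only.
    """
    commands = []
    start = 0
    part = 1
    stem = source.rsplit(".", 1)[0]
    while start < total_seconds:
        output = "{}-part{:02d}.mkv".format(stem, part)
        args = ["ffmpeg", "-ss", str(start), "-i", source]
        # Only non-final splits need an explicit duration; without -t,
        # ffmpeg keeps going until the end of the input.
        if start + split_seconds < total_seconds:
            args += ["-t", str(split_seconds)]
        args += ["-c", "copy", output]
        commands.append(args)
        start += split_seconds
        part += 1
    return commands


if __name__ == "__main__":
    # Print the commands rather than running them.
    for command in split_commands("campaign.mkv", 4000):
        print(" ".join(command))
```

Building the argument lists separately from running them makes it easy to eyeball the plan before committing any CPU time to it.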

Uploading the Split Videos

While I have a script that will upload videos to YouTube, doing so absolutely blasts through quota usage, meaning only six or so videos per day could be uploaded. So I upload through the browser.
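The arithmetic behind that limit can be sketched as below. The 10 000 unit daily quota is the default figure for a project; the 1 600 units per upload is an assumption that should be checked against the current YouTube Data API quota cost table.

```python
DAILY_QUOTA = 10_000   # default per-project daily quota
UPLOAD_COST = 1_600    # ASSUMED cost of one API upload; verify before relying on it
BACKLOG = 51           # split videos waiting to go up

uploads_per_day = DAILY_QUOTA // UPLOAD_COST
# Ceiling division: days needed to clear the backlog via the API alone.
days_for_backlog = -(-BACKLOG // uploads_per_day)

print(uploads_per_day, days_for_backlog)
```

Under those assumptions the API route would take over a week for this backlog, before counting any quota spent on updates.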

Since having a browser window open to upload all the time is inconvenient if the computer should be off, suspended or booted into Windows, I run a browser on a headless server in a container (LXC under Proxmox) using xpra. I seem to remember running xpra pre-1.0 many years ago, so this is nothing new!

There’s no real problem here, except a recent update caused me to lose the ability to scroll. I then dived down a rabbit hole of trying to get the latest version compiled (eventually resolved) rather than use the distro / community repo version. That sucked up more time than I expected, though it was good to submit a PR and get compiling sorted for xpra with ffmpeg post-4.4.

Unfortunately that didn’t restore the scrollwheel, but that’s hardly a deal-breaker.

Generate JSON for New Videos

My main YouTube upload / update script deals with input in JSON format. I used to work with CSV/TSVs for my own tools, but JSON is so much better: it’s more flexible and much less error-prone.

As part of my bertieb-video-tools (can you see why a reorganisation is due?) set of tools I have a script, genjson.py, which is a genericised version of a bunch of “one-off” scripts to generate JSON. This one takes a template which specifies common titles, descriptions, numbering and scheduling. I quite like it as a system!

However, that too has accumulated features in a slightly… crufty way. Not only does it generate JSON, it can also update the JSON files it has generated. Those features should really be separated out, and it should be more obvious how to invoke them.

That said, once I’d figured out how to properly run my own tool it did a grand job. The related script get_ids.py fetched the ids of the video files that had been uploaded to YouTube and added that information to the JSON file. Doing so makes the main YouTube script work in ‘update’ mode, rather than ‘upload’ mode.
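To give a flavour of the template idea, here is a minimal sketch. It is not genjson.py or get_ids.py: the template keys, the title text, the start date and both function names are hypothetical placeholders, and the real tools may be shaped quite differently.

```python
import json
from datetime import date, timedelta

# Hypothetical template; every key and value here is a placeholder.
TEMPLATE = {
    "title": "EUIV: Mare Nostrum #{number}",
    "description": "Part {number} of the Aragon campaign.",
    "first_number": 4,
    "first_date": "2021-01-01",
    "days_between": 1,
}


def generate_entries(template, filenames):
    """Expand the template into one entry per video file, in sorted order."""
    start = date.fromisoformat(template["first_date"])
    entries = []
    for index, filename in enumerate(sorted(filenames)):
        number = template["first_number"] + index
        publish = start + timedelta(days=index * template["days_between"])
        entries.append({
            "file": filename,
            "title": template["title"].format(number=number),
            "description": template["description"].format(number=number),
            "publish_at": publish.isoformat(),
        })
    return entries


def mode_for(entry):
    """An entry with a video id gets updated; one without gets uploaded."""
    return "update" if entry.get("id") else "upload"


if __name__ == "__main__":
    videos = ["2019-07-25 07-39-19.mkv"]
    print(json.dumps(generate_entries(TEMPLATE, videos), indent=2))
```

Keeping the presence of an id as the only switch between the two modes means the same JSON file can be reused from first upload through to later metadata tweaks.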

Generate Thumbnails for Videos

Thankfully I had already created a template for the series thumbnails:

However, I had manually done the thumbnails for the first three videos already on YouTube. That’s definitely not a convenient method for the next 51 thumbnails!

ImageMagick to the rescue:

convert marenostrum-numberless.png -gravity SouthEast -font BeyondTheMountains -fill "#c54353" -pointsize 550 -stroke black -strokewidth 15 -annotate +225-100 "4"

We’re using the -annotate option to write the video number in the bottom-right (SouthEast) in a rather large fontsize (550!) with a very thick stroke (15!!).

Since it could be done I just needed to wrap that in a script which would do it:

- for all 51 video files

- starting from 4

- moving the number offset slightly for double digits

- outputting a jpeg with a filename that matched the parent video

The last requirement might seem odd at first glance. The reason for that is my YouTube update script can generate thumbnails on-the-fly using game-specific thumbnailers; however it also has the ability to use pre-generated thumbnails where it looks for a file matching the video file in question except with a jpg extension. For example: 2019-07-25 07-39-19.mkv and its thumbnail 2019-07-25 07-39-19.jpg.
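The filename matching can be sketched with pathlib. This is an illustration of the lookup rule rather than the update script’s actual code, and the function name is hypothetical.

```python
from pathlib import Path


def pregenerated_thumbnail(videofile):
    """Return the sibling .jpg for a video file, or None if there isn't one."""
    candidate = Path(videofile).with_suffix(".jpg")
    return candidate if candidate.is_file() else None


if __name__ == "__main__":
    print(pregenerated_thumbnail("2019-07-25 07-39-19.mkv"))
```

Using with_suffix swaps only the final extension, so the rest of the timestamped filename is left untouched.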

The script:

#!/usr/bin/env python3
"""write thumbnails for Mare Nostrum campaign"""

# marenostrum-thumbnails.py - generate thumbnails for Mare Nostrum campaign
# Starting from 4, loop through mkvs in video dir and create thumbnail
# for each video file

import json
from pathlib import Path
import subprocess

BASEIMG = "/home/robert/images/marenostrum-numberless.png"


def generate_image(number, videofile):
    """ImageMagick `convert' BASEIMG with number"""
    # convert marenostrum-numberless.png -gravity SouthEast
    # -font BeyondTheMountains -fill "#c54353" -pointsize 550
    # -stroke black -strokewidth 15 -annotate +75-100 "27" - | display

    # Double-digit numbers are wider, so they sit closer to the right edge
    offset = 75 if number >= 10 else 225
    # Thumbnail shares the video's filename, with a jpg extension
    outputimg = videofile.replace(".mkv", ".jpg")
    magick_args = ["convert", BASEIMG, "-gravity", "SouthEast",
                   "-font", "BeyondTheMountains", "-fill", "#c54353",
                   "-pointsize", "550", "-stroke", "black",
                   "-strokewidth", "15",
                   "-annotate", "+{}-100".format(offset),
                   str(number),
                   outputimg]
    print(" ".join(magick_args))
    subprocess.run(magick_args, check=False)


if __name__ == "__main__":
    with open("euiv-marenostrum.json", "r") as fh:
        jin = json.load(fh)
    videodir = Path(jin["filesdir"])
    videos = list(videodir.glob("*.mkv"))
    # The first three videos already have hand-made thumbnails
    num = 4
    for video in sorted(videos):
        generate_image(num, str(video))
        num += 1

It worked quite nicely!

Updating Using YouTube Update Script

The final minor stumbling block was, oddly enough, my heavily-used main YouTube upload/update script.

I’ve started using pyenv; it’s handy, but it also has issues with system libraries. Prior to properly using virtual envs, like most people I installed modules and libraries globally. That’s fine, except the pyenv shim seems to remove the system-installed libraries (/usr/lib/python3.9/site-packages/) from Python’s module search path.

So I would get a complaint about module not found: pyfoolib, despite that existing in /usr/lib/python3.9/site-packages/. More annoyingly, when I tried to install the package to the local pyenv-managed site-packages directory, pip would complain that the requirement was already satisfied, since it could see the package in the system library directory.
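One way to see what is going on is to ask the running interpreter where it is looking. A minimal diagnostic sketch follows; the function name is hypothetical, and pyfoolib is just the placeholder module name used above.

```python
import importlib.util
import sys


def describe_module(name):
    """Report which interpreter is running and where `name` would load from."""
    spec = importlib.util.find_spec(name)
    return {
        "interpreter": sys.executable,
        "found": spec is not None,
        "origin": getattr(spec, "origin", None),
        "search_path": list(sys.path),
    }


if __name__ == "__main__":
    info = describe_module("pyfoolib")  # placeholder module name
    print(info["interpreter"])
    print("found" if info["found"] else "not found", info["origin"])
    for entry in info["search_path"]:
        print("  ", entry)
```

Running this with both the pyenv-shimmed python and the system python should make it obvious whether the system site-packages directory appears in the search path of each.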

I couldn’t find the official recommendation on how to handle that, and pyenv doesn’t seem to have chat-based support, so I told pip to ignore the installed packages. From pip help install:

-I, --ignore-installed Ignore the installed packages, overwriting them. This can break your system if the existing package is of a different version or was installed with a different package manager!

That let pip install the package to the pyenv-shimmed directory.

Of course I then had an issue I had before with the youtube-upload script:

AttributeError: module 'oauth2client' has no attribute 'file'

This is a longstanding issue. The workaround, adding from oauth2client import file to the package’s main.py, worked fine again. I had to redo my authentication*, but after that it worked fine!

* for some reason one of my YouTube API projects has a maximum quota of 0. Zero is not a lot of quota. Thankfully another project has the default 10 000, which is a bit more helpful.
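A plausible explanation for why the oauth2client workaround helps, assuming the package’s __init__.py does not import its file submodule itself: in Python a submodule only becomes an attribute of its parent package once something has imported it. The sketch below demonstrates that with a throwaway package; demo_oauth_pkg and its contents are stand-ins made up for the example, not the real library.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def demo_submodule_attribute():
    """Show that a submodule only becomes an attribute once it is imported."""
    with tempfile.TemporaryDirectory() as tmp:
        # Throwaway package standing in for oauth2client (hypothetical name).
        pkg = Path(tmp) / "demo_oauth_pkg"
        pkg.mkdir()
        (pkg / "__init__.py").write_text("")  # imports none of its submodules
        (pkg / "file.py").write_text("class Storage:\n    pass\n")

        sys.path.insert(0, tmp)
        importlib.invalidate_caches()
        try:
            package = importlib.import_module("demo_oauth_pkg")
            before = hasattr(package, "file")   # submodule not imported yet
            importlib.import_module("demo_oauth_pkg.file")
            after = hasattr(package, "file")    # now bound on the package
        finally:
            # Leave the interpreter as we found it.
            sys.path.remove(tmp)
            sys.modules.pop("demo_oauth_pkg.file", None)
            sys.modules.pop("demo_oauth_pkg", None)
    return before, after


if __name__ == "__main__":
    print(demo_submodule_attribute())
```

That is why a bare import of the package followed by package.file can fail in one environment and succeed in another: it depends on whether anything else happened to import the submodule first.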

Once that was sorted, all was done!