pandoc micro tip: disabling line wrap / newlines

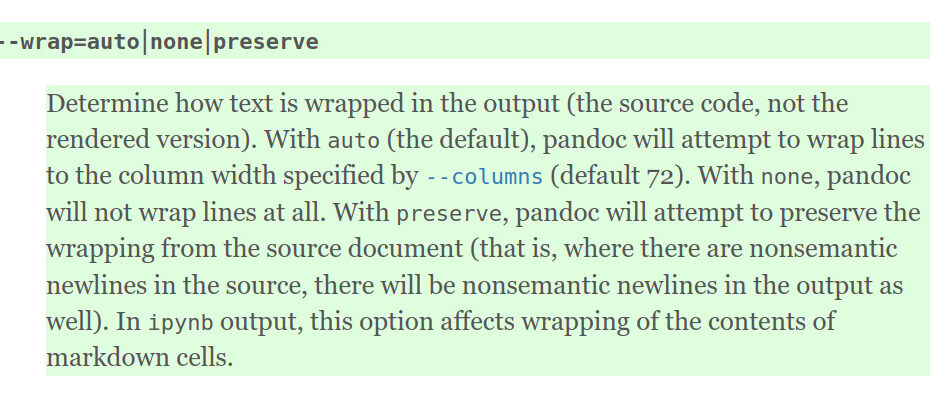

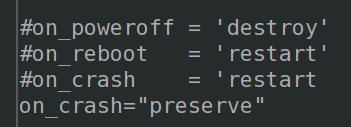

Since this doesn’t appear in my own previous ‘tips to myself’, I’m documenting it here for myself: the option for disabling wrapping is:…